Key Findings

- The Center for the Study of Organized Hate (CSOH) documented and analyzed 128 X posts targeted at Indians broadly within the Western context.

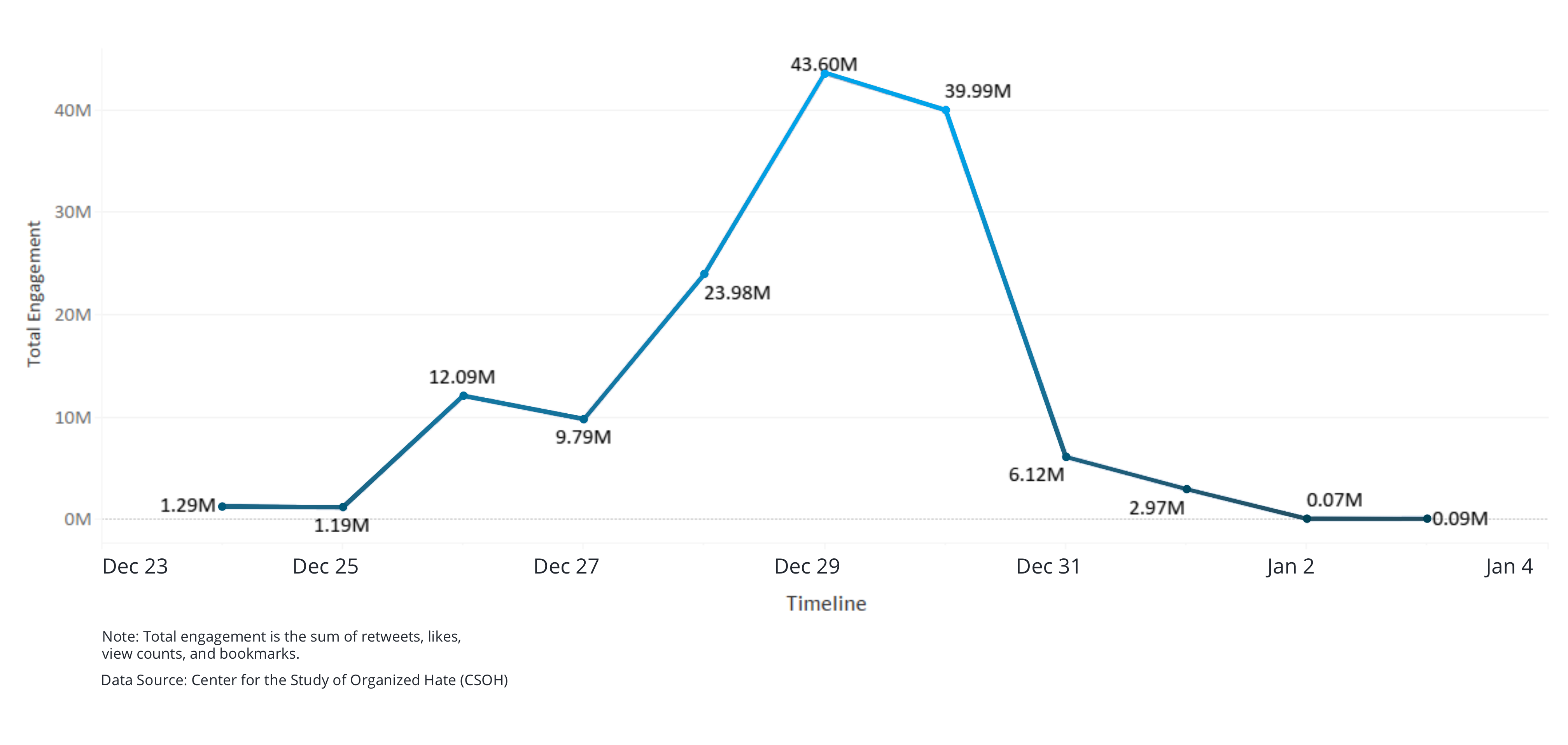

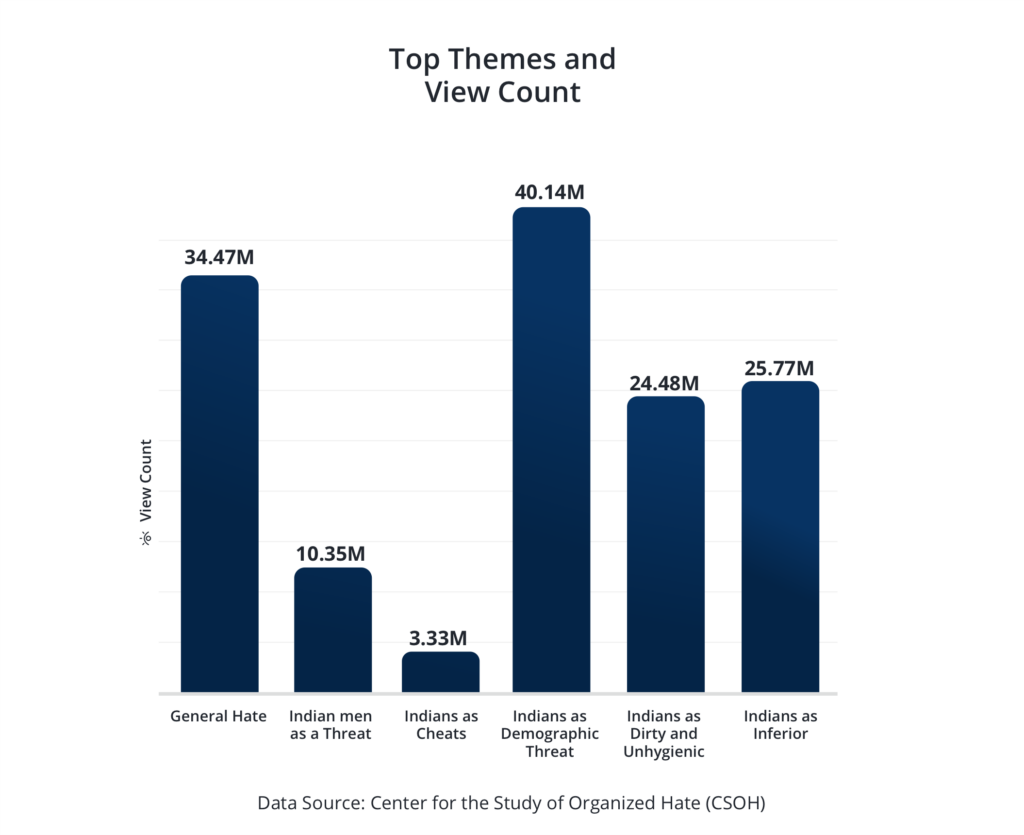

- Together, the posts in our dataset received 138.54M views on X as of January 3, 2025. 36 posts received over a million views each, 12 of which claimed that Indians are a demographic threat to white America.

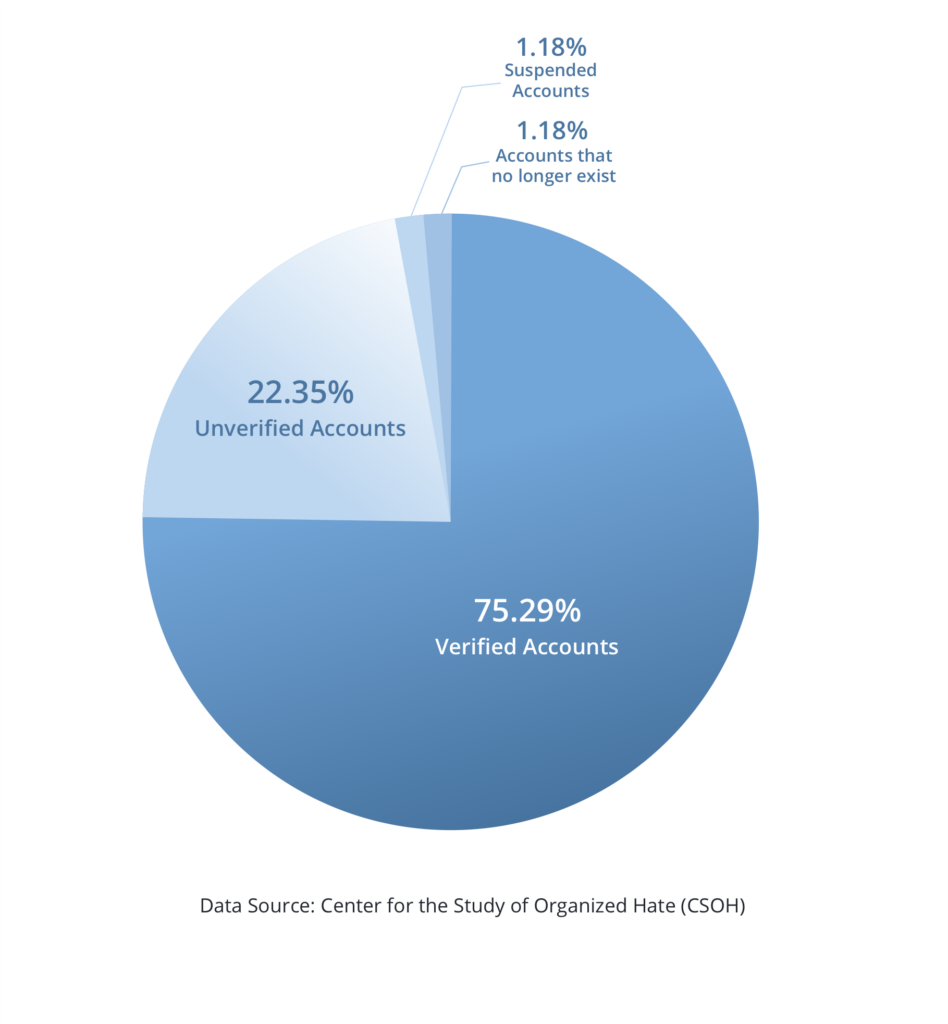

- 64 of the 85 X accounts documented in our dataset are subscribed to X Premium and display a blue badge on their profiles.

- The posts were in violation of X’s policies on Hateful Conduct. Violations included Incitement through ‘inciting fear or spreading fearful stereotypes about a protected category,’ slurs and tropes, and dehumanization.

- As of January 3, 2025, 125 posts in our sample remain active. Eight posts have been marked as sensitive, and one post remains active with limited visibility due to potential violations of X’s rules against Hateful Conduct.

- Only 1 of the 85 accounts in our dataset has been suspended by X.

- Notably, these attacks were not exclusively aimed at Hindus of Indian or American origin but extended to all those perceived as being of Indian descent, including Sikh community members.

Recommendations

Recognition of Anti-Indian/South Asian Racial Slurs: X’s policy on hate speech prohibits “targeting others with repeated slurs, tropes or other content that intends to degrade or reinforce negative or harmful stereotypes.” Enforcing this policy requires regular updates and monitoring of hateful slurs that emerge in different contexts. The platform did ban a number of accounts associated with the racist Groyper movement, but the lack of consistency in content moderation and enforcement of existing policy means that many suspect that the accounts were banned simply for disagreeing with the site’s owner.

Expanded and Refined Definitions: The report shows that a number of South Asia-focused racial slurs are widespread on the platform. Some terms like ‘pajeet’ are relatively recent, while others like ‘Curry’ have long been in use and have undergone some destigmatization. Content moderation requires active monitoring of racialized terminology, as well as an understanding of the positionality of the speaker. X must ensure that its content moderation policy accounts for the nuances of such language on and beyond the platform.

Establishment of an Advisory Council: The dissolution of Twitter’s Trust and Safety Council in December 2022 harmed the platform’s reputation and its ability to respond to the evolving realities of online hate. X must create a new advisory council to consistently monitor the platform and issue actionable recommendations.

External Stakeholder Engagement Framework: Stakeholder engagement is critical for all platforms, and X must proactively reach out to scholars, activists, and community leaders for advice on hate speech trends and policy updates. This must be done transparently, so that users can understand policy changes and external stakeholders can monitor the impact of their recommendations.

Use of Community Notes Proactively: X’s Community Notes feature allows contributors to add context, such as fact-checks, under a post. This could be used to counter non-factual and fabricated claims regarding immigrants and temporary workers, including claims about crime statistics, the percentage of jobs taken by immigrants, and the conditions and restrictions on short-term visas, student visas, etc.

Community Empowerment: While it is inevitable that any platform will have some degree of discussion on immigration policies of various countries, platforms can play a proactive role in ensuring that such discussions are informative rather than hateful. It is important for platforms to specifically intervene as immigrants (and refugees) may not be clearly identified as protected categories in many countries.

Community Reporting Tools: X should create a specific category of ‘vulnerable migrants, refugees, or displaced persons,’ which would prohibit hateful, violent, or other prohibited expressions aimed at them.

Reporting Intersectional Hate: X’s current reporting tools do not allow for flexibility in reporting intersectional forms of hate. As an example, a post could insinuate that Indians are unhygienic (Hate) and include a call for ‘cleaning’ the country (Violent Speech). Users can only report one violation at a time, which means that if a user fails to identify the correct violative clause, the content may continue to be hosted on the platform.

Transparency: Many jurisdictions require platforms to publish transparency reports (Digital Services Act in the European Union, Online Safety Act in the U.K., etc.). X could use the opportunity to clarify the extent of anti-Indian/South Asian hate and its actions regarding the moderation of actionable content. X leadership could also decide to proactively provide additional context on trends in violative content, especially in relation to marginalized groups and communities.

Counter-Speech: Counter-Speech is defined as speech that challenges hateful narratives. Platforms have long sought to promote counter-speech as a better solution to hateful content than bans and restrictions on violative content. X must identify a coherent Counter-Speech strategy that aligns with the company philosophy. This may include the following:

Enhanced Discoverability: X should identify content that aligns with its counter-speech strategy and enable visibility for such content in the ‘For You’ section, especially content from users who openly deconstruct hateful narratives.

Premium Features: One of the most prominent changes to the platform has been the transition away from legacy verification. X introduced X Premium as a paid verification feature, which has been repeatedly criticized for amplifying hate speech, spam, and disinformation. However, extending premium features for counter-speech initiatives at discounted rates or even for free could help the platform.

Enforcement Options: Our report shows that 64 of the 85 aforementioned X accounts were verified (i.e., subscribed to X Premium). This brings into focus the potentially distorting effect of the Premium service on the platform, as hateful voices may be amplified. It has been reported that some accounts posting hateful content were banned or restricted, but, as previously noted, enforcement has been rather inconsistent. X must improve its range of enforcement options:

Disqualification from Premium Services: Accounts that repeatedly post hateful or extremist content must be barred from X Premium. Moreover, posting and amplifying hateful or extremist content should be restricted for verified accounts. Pre-verification checks should ensure that the user doesn’t have a history of hateful or extremist conduct.

Creator Monetization: Currently, extremist and hateful content is categorized under ‘Restricted Monetization’; this must be upgraded to the level of ‘Prohibited Monetization.’

Recommender Systems: Recommender systems form the basis of most social media platforms, which means that extremist and hateful content can be promoted by users who exploit the mechanism. X must perform a systematic review of its recommender systems and publish its approach to preventing the recommendation of hateful and extremist content.